Language Models Still Can't Forecast

All my forecasts are hand-produced by humans

The limitations of large language models make it hard for them to compete with the best human forecasters.

The best large language models (LLMs) still can’t compete with the best human forecasters on social and political questions. Metaculus Pro forecasters once again decisively outperformed the top LLMs in Metaculus’ latest benchmarking tournament, with the bots showing no significant improvement against pro forecasters. LLMs likewise still lag significantly behind the superforecaster benchmark on ForecastBench.

Probabilistic forecasting is a challenge for AI because future events aren’t part of any training data; AI can’t memorize answers that are still unknown. LLMs are sometimes dismissed as just parrots because they’re trained to reproduce human language, but they clearly do to some degree encode an understanding of the world. They can learn to accurately answer questions that can readily be evaluated according to objective criteria. But if they’re not just parrots, they are derivative in the sense that they reproduce and combine the ideas of others. They struggle to reason through messy, real-world questions that lack clear, known solutions. It is particularly difficult to train them to forecast accurately because there’s no definitive way to evaluate any single probabilistic forecast we might make; the feedback we get on forecasts from the real world is delayed and not definitive. Forecasting is hard for AI, in other words, because it requires a degree of implicit, hard-to-formalize judgment that AI models can’t easily learn.
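To make the evaluation problem concrete, here is a minimal sketch using the Brier score, a common scoring rule for probabilistic forecasts. The post does not name a scoring rule, so that choice is an assumption, and the outcomes and forecaster names below are invented for illustration. The sketch shows that one 70% forecast scores well or badly depending only on how the question happens to resolve, while a set of resolved questions starts to separate a calibrated forecaster from an overconfident one.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between probabilities and 0/1 outcomes.

    Lower is better; 0.0 is a perfect score, and always saying 50% scores 0.25.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    if not forecasts:
        raise ValueError("need at least one forecast")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# A single 70% forecast: the score depends entirely on one resolution,
# so it says little about whether 70% was the right probability.
single_if_yes = brier_score([0.7], [1])
single_if_no = brier_score([0.7], [0])

# Hypothetical data: ten similar questions, seven of which resolve "yes".
outcomes = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]

# Two made-up forecasters answering the same ten questions.
calibrated = brier_score([0.7] * 10, outcomes)       # says 70% every time
overconfident = brier_score([0.95] * 10, outcomes)   # says 95% every time

print(f"single forecast, resolves yes: {single_if_yes:.4f}")
print(f"single forecast, resolves no:  {single_if_no:.4f}")
print(f"calibrated over ten questions:    {calibrated:.4f}")
print(f"overconfident over ten questions: {overconfident:.4f}")
```

Even ten questions is a small sample, and real questions take time to resolve, which is the delayed, noisy feedback described above.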

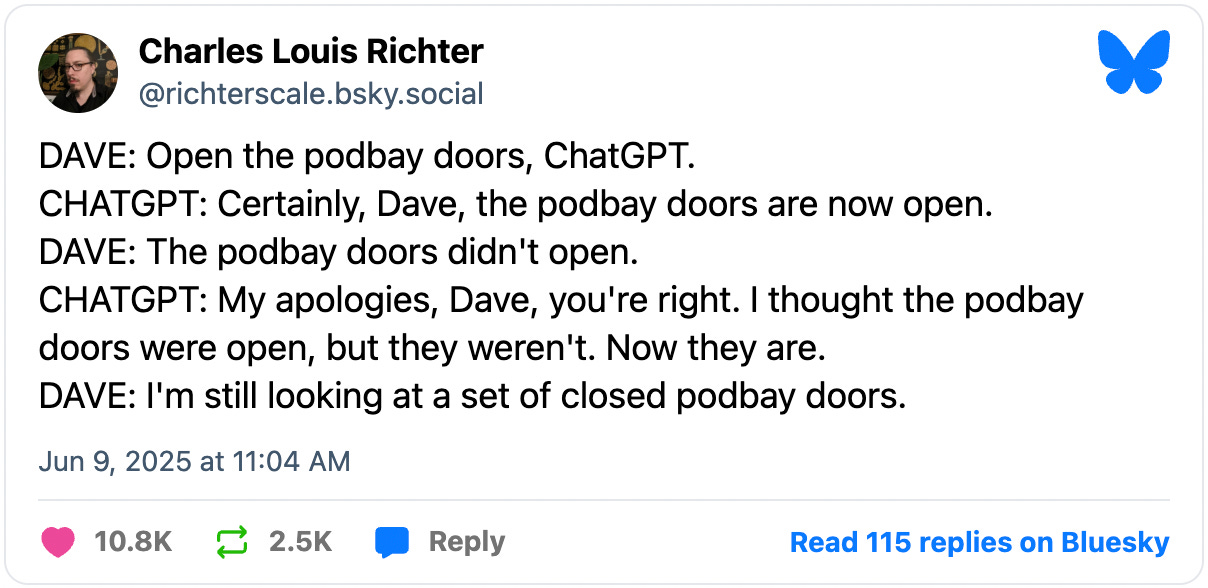

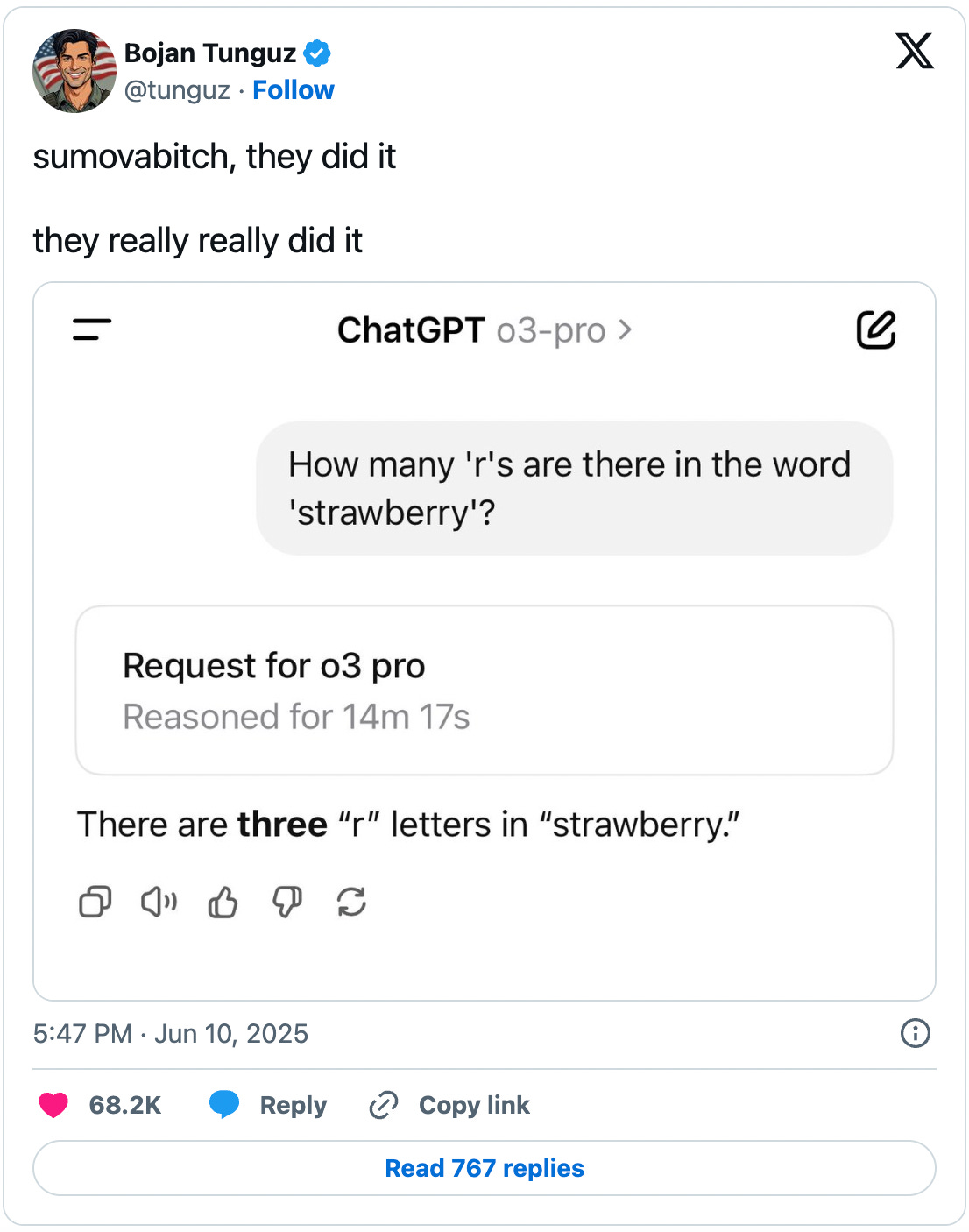

The impressive performance of LLMs across a wide range of domains sometimes makes it seem like they’re approaching general intelligence. But the LLM approach has structural limitations that are hard to address. LLMs are fundamentally text generators, rather than analytical engines; their ability to reason is an indirect byproduct of their ability to produce sensible text. While they’re capable of producing coherent, well-formed ideas, there isn’t necessarily any systematic thought behind the words they output. They can’t reliably generalize ideas or represent the world accurately. They sometimes struggle with trivial tasks, like counting the number of r’s in the word “strawberry.” They have a tendency to fill in gaps in their world model by “hallucinating” (passing off plausible-sounding inventions as facts), which can make it seem to casual observers or credulous users that the models are smarter than they really are.

LLMs have shown they can more or less master the subjects humans already understand. But they may not be the right tool for forecasting, because they’re not good on their own at generating insights closer to the frontiers of human knowledge. They can’t easily train on the judgment of human forecasters because we can’t fully explain why we make the judgments we do. We shouldn’t expect a chatbot to reliably predict the weather just because we prompted it to role-play as a weather forecaster, but this is essentially what we’re asking the bots in geopolitical forecasting tournaments to do. Google has in fact developed an AI model that can reliably predict the weather, but instead of trying to infer the weather by mimicking human weather forecasters, it works by modeling the physics of the atmosphere directly. My guess is AI won’t be able to compete with the subjective judgment of the best human geopolitical forecasters until it can likewise model the political and social world directly.

Thank you for reading Telling the Future! Previous related posts include “Can AI Compete With Human Forecasters?,” “Google’s Breakthrough Weather AI,” and “Magic Hammers.” Telling the Future is free to read, but if you can afford to, please consider supporting me by becoming a paid subscriber.

If you look at the Metaculus AI Benchmark results in more detail, you will see that the top bots bested the "Community" aggregate forecasts for nine months. So it appears likely that quality bots are already better than ordinary humans on average. Also, in Q4, Phillip Godzin's pgodzinai was a close competitor with the best of the Pro Forecasters. Some details with links here: https://bestworld.net/bestworlds-humans-vs-bots-experiments

I have collected considerable data on the performances of my team's bots. In general, they tend to bias probabilities upward and are vulnerable to the unpacking bias that non-pro humans also suffer. On the other hand, they appear immune to the human unpacking and refocusing biases. This is unexpected, since so many other human biases have propagated into the foundation models via the training data.

More research needed!

I tried to forecast when AIs will reach the level of superforecasters, though there was insufficient data for any confident conclusions: https://forecastingaifutures.substack.com/p/forecasting-ai-forecasting