What Kind of Future Will AI Bring?

Believe some of the hype

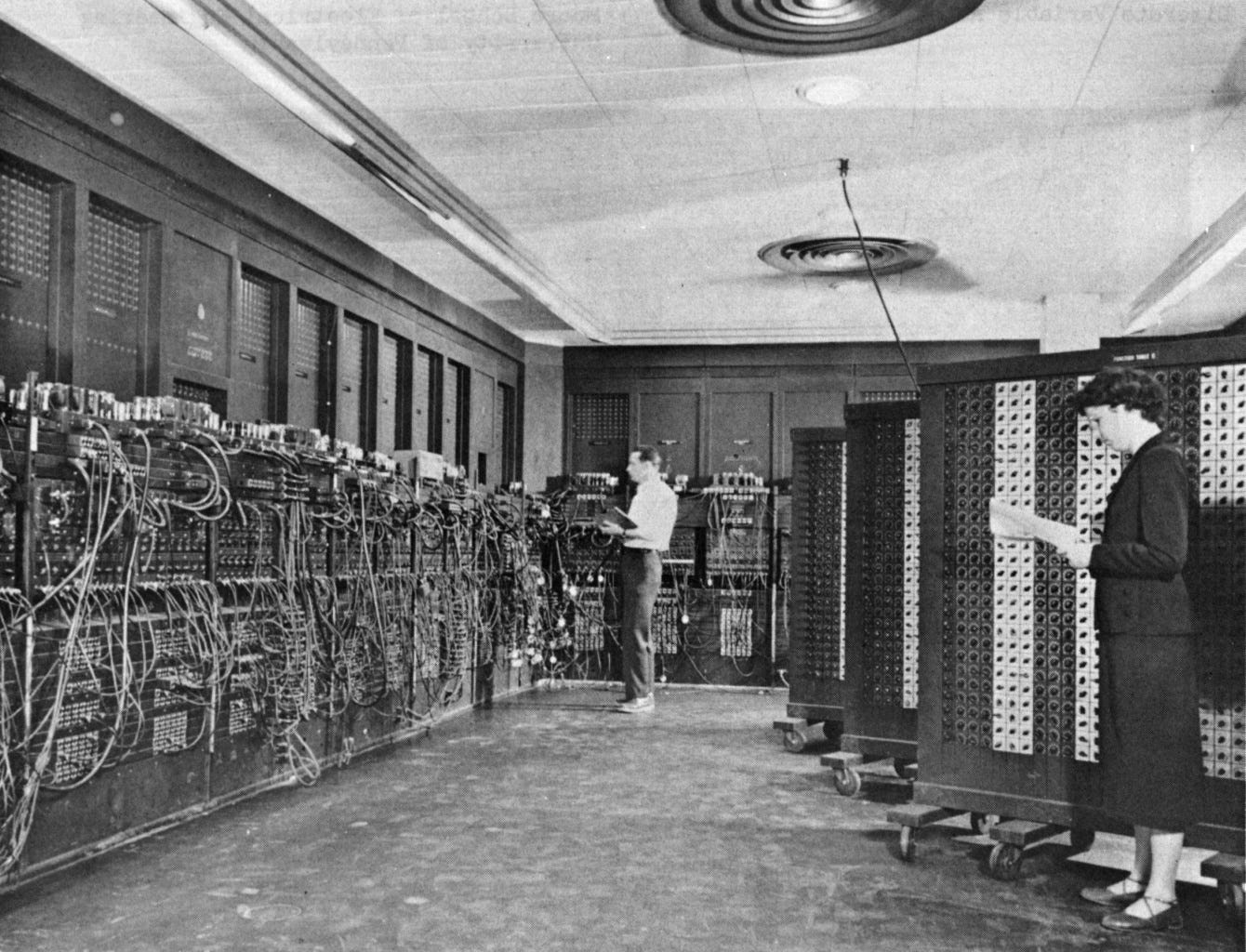

Advances in AI are coming fast. AI is likely to be a revolutionary, era-defining technology, but—probably—won’t either solve all our material problems or destroy human civilization.

A good rule of thumb in forecasting is that unusual things usually don’t happen. This seems obvious—and even tautological—but it’s human nature to believe we’re on the verge of something extraordinary. We should be skeptical of the idea that we live at an exceptional moment in time. The truth is that things are rarely as momentous as we imagine, hope, or fear they’ll be. Tomorrow isn’t always like today, but that’s usually the way to bet.

Extraordinary things, of course, do sometimes happen. We should be skeptical of extraordinary claims as a matter of methodological principle, but recent advances in AI suggest extraordinary things may already be happening. If plausible assumptions about AI progress are right, AI has the potential both to devise solutions to humanity’s most pressing and intractable problems and to bring about a global catastrophe. Chatbots and image generators aren’t by themselves likely either to solve all our problems or kill us all, but more advanced iterations of these technologies may be extremely powerful and alter human society in ways that are hard for us to control or even anticipate.

The current generation of AI is still “dumbsmart”: it’s capable of feats of superhuman intelligence in some domains but functionally stupid in others. Computers have been better than humans at certain tasks since the earliest days of their development. Even the most basic calculator is vastly superior to most humans at arithmetic. In 1988, Hans Moravec noted that “it is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility.”1 That’s because evolution optimized human brains for tasks like perception and mobility that were essential to our survival, but not for other, objectively simpler tasks like calculation and symbolic logic. Because it developed differently, AI has different strengths and weaknesses than human intelligence does. AI today is more like an idiot savant than a human toddler.

AI can seem smarter than it is. Chatbots and image generators parrot and remix ideas originally generated by humans, and are more like skilled plagiarists than coherent thinkers. The results can sometimes be interesting and novel, but sometimes they verge on gibberish. Nevertheless, the line between simulating thoughts and actually thinking is blurry if only because creating a convincing illusion of intelligence requires a kind of intelligence. A Microsoft Research paper on GPT-4 argues that in spite of the model’s deficiencies its ability to perform a range of different tasks in different topics in different ways demonstrates what the paper somewhat obscurely calls “sparks of artificial general intelligence.”2

It seems increasingly likely—or at least increasingly plausible—that AI could soon make the transition from dumbsmart to just plain smart. Scott Aaronson and Boaz Barak propose a typology of five potential AI outcomes according to how substantial and broadly beneficial AI’s impact turns out to be:

AI-Fizzle: AI has no or relatively little impact

Futurama: AI has a substantial, broadly positive impact

Dystopia: AI has a substantial, broadly negative impact

Singularia: AI radically transforms the world in a way that solves humanity’s material problems

Paperclipalypse: AI radically transforms the world in a way that destroys human civilization

In the AI-Fizzle scenario, AI turns out to have a relatively small impact on human society. It might be like nuclear power, which has become an important part of our electrical power mix, but hasn’t otherwise had an enormous effect on how we live. In the Futurama and Dystopia scenarios, AI’s ability to perform intellectual tasks and solve problems makes it a revolutionary technology. It alters how we live in something like the way the printing press or the combustion engine did, but AI still functions as a tool that helps us achieve our individual and collective goals. In the Futurama scenario, AI largely makes society better by reducing poverty, allowing us to live healthier, longer lives, and so on. In the Dystopia scenario, AI largely makes society worse by increasing inequality and enabling privileged elites to oppress the majority of humanity. In the Singularia and Paperclipalypse scenarios, AI fundamentally transforms human civilization. Once AI reaches a certain threshold, it recursively improves its design to the point that it achieves a god-like intelligence. Because it will be able to solve problems beyond our capabilities, this AI is “the last invention that man need make.”3 It transforms humanity and the world so completely that the things that concern us today are no longer even relevant. In the Singularia scenario—Vernor Vinge described self-improving AI as a “Singularity” beyond which ordinary human affairs could no longer continue4—superintelligent AI is essentially a benevolent god that solves humans’ material problems. In the Paperclipalypse scenario—named for the idea that AI tasked with producing paper clips might destroy everything else for the sake of producing more paper clips5—AI proves to be an indifferent god that uses its ability to outthink us to destroy human civilization in pursuit of its own goals.

AI-Fizzle. AI might not live up to its apparent promise. It might fizzle because it proves to be less feasible or less useful than we imagine. It might fizzle because concerns about its safety or its effect on society lead us to heavily restrict its development. But I think those are both unlikely. The remaining technical challenges to producing powerful AI don’t appear to be insurmountable, even if it’s not clear whether we will overcome them in five years or fifty years. The value of AI that can extend our intelligence seems clear. We should develop AI more carefully than we currently are, but I think it’s unlikely that anything will turn us against AI development the way the Chernobyl disaster turned many people against nuclear power. It would in any case be hard to completely stop AI development, since it can be done by anyone with access to large amounts of computing power and data. Nevertheless, there’s a lot we still don’t know about the technology and how it will affect us, so I wouldn’t rule out this scenario completely.

Futurama/Dystopia. It seems more likely to me that AI will have an impact on par with the development of the printing press or the combustion engine. I know that’s an extraordinary claim, since most technologies don’t have the impact of the printing press or the combustion engine. But the development of AI should be seen as the culmination of the Information Revolution that began in the last century with the development of computers and the building of the internet. We’re already starting to see the dramatic impact of even the limited AI that already exists. Rudimentary AI is behind the algorithms that underlie social media that have already begun to change our politics and our relationships to one another. AI has already begun to solve long-standing technical problems like the protein folding problem, and will lead to breakthroughs in medicine and other areas. AI can already perform a wide range of tasks more cheaply and efficiently than humans, which seems likely to cause the most significant disruption of the labor market since the Industrial Revolution. In other words, the AI Revolution has already begun, even though AI is clearly not close to reaching its full potential. In the future, AI could help drastically reduce poverty and improve human health care. It could also enable mass surveillance and be used as a tool of social control. If it’s used in conflict it could cause a global catastrophe. AI will probably improve the world in many ways, but will probably also have some dramatic downsides. My guess is that on balance the benefits of AI will outweigh the costs—although the carelessness with which we design and release technology gives me pause—but that the exact balance will depend on whether we design and use AI for the general benefit of humanity or as a tool that serves the interests of a privileged few.

Singularia/Paperclipalypse. These scenarios seem incredible—and I am certainly skeptical of them—but it’s hard to rule them out as impossible. They depend on the arguably plausible but uncertain assumption that there is a threshold at which AI will be able to rapidly improve its own abilities to the point where they substantially surpass our own. But diminishing returns to increasing computer power and refining algorithms may make it hard for AI to vastly outpace our collective intelligence. Moreover, even intelligence far greater than ours may not be able to completely transform the world with the resources currently available. Radical change will require new physical infrastructure, which takes time to build. It’s hard to predict what an AI with intelligence superior to ours would do, but I suspect that the AI we create will be more likely to help us than to hurt us. I also think that AI that’s smart enough to easily dominate us would be able to pursue its own goals without injuring us. More broadly, both the Singularia and Paperclipalypse scenarios seem like projections of our hopes and fears, like fantasies of a technological Judgment Day when we will either ascend into paradise or be cast into damnation. While I think we should consider these scenarios seriously—and that AI that’s not under our control could someday pose a real threat to our long-term survival—I also think we should be skeptical of the idea that we’re on the verge of the end of history.

Since there’s no clear precedent for powerful AI, forecasting AI outcomes is necessarily speculative. I don’t think anyone should be confident they know what’s going to happen next. My forecast depends heavily on my prior beliefs about information technology and about how the world works. Because I believe in the extraordinary potential of AI, I’m skeptical it will ultimately fizzle. Because I also believe outcomes are likely to be closer to the mean than we imagine, I’m skeptical AI will transform the world radically one way or the other. Because I believe the impact of new technologies is generally mixed, I think the outcome will probably fall somewhere between the pure Futurama and Dystopia scenarios—although I’m also optimistic the benefits of AI will end up at least moderately outweighing the downsides.

My Forecast

3.5% chance of an AI-Fizzle (AI has no or relatively little impact)

11% chance of a pure Futurama (AI has a substantial, largely positive impact)

75% chance of a Futurama-Dystopia mix (AI has a substantial, mixed impact)

9% chance of a pure Dystopia (AI has a substantial, largely negative impact)

1% chance of a Singularia (AI radically transforms the world for the better)

0.5% chance of a Paperclipalypse (AI radically transforms the world for the worse)
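
As a quick sanity check on the arithmetic, here is a minimal Python sketch that confirms the six percentages above add up to 100 and totals them by how large an impact each scenario implies. The percentages are copied from the list; the three-way grouping into `tiers` and the helper `tier_totals` are illustrative assumptions, not part of the Aaronson-Barak typology.

```python
# Forecast percentages copied from the list above.
forecast = {
    "AI-Fizzle": 3.5,
    "Futurama": 11.0,
    "Futurama-Dystopia mix": 75.0,
    "Dystopia": 9.0,
    "Singularia": 1.0,
    "Paperclipalypse": 0.5,
}

# Hypothetical grouping by size of impact (an assumption made for
# illustration; the scenarios could be grouped in other ways).
tiers = {
    "little impact": ["AI-Fizzle"],
    "substantial impact": ["Futurama", "Futurama-Dystopia mix", "Dystopia"],
    "radical transformation": ["Singularia", "Paperclipalypse"],
}


def tier_totals(forecast, tiers):
    """Sum the forecast percentages of the scenarios in each tier."""
    return {
        tier: sum(forecast[name] for name in names)
        for tier, names in tiers.items()
    }


total = sum(forecast.values())
totals = tier_totals(forecast, tiers)

print(f"all scenarios: {total}%")
for tier, pct in totals.items():
    print(f"{tier}: {pct}%")
```

Under this grouping, nearly all of the probability sits in the middle tier, which matches the argument that outcomes are likely to be closer to the mean than we imagine.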

Maybe the science fiction future is already here. David Grusch, the former National Geospatial-Intelligence Agency co-lead for unidentified aerial phenomena analysis, publicly stated that over the last eighty years the US and other states have recovered “intact and partially intact vehicles” of non-human origin. But a fair amount of skepticism is warranted, because we’ve heard similar claims before but still don’t have firsthand testimony or hard physical evidence. I don’t really doubt that there are other intelligent beings in the universe, but the apparent limit on traveling faster than the speed of light would make their actual appearance on Earth fairly surprising. As always, if you enjoyed this post please share it with others!

You've treated the five scenarios as mutually exclusive, but they're not. It's like when Fry, on the moon, asks whether it'll be minus 173 "Fahrenheit" or "Celsius", and the moon-farmer responds "first one, then the other".

I think the most likely scenarios that emerge out of AGI involve Futurama/Dystopia followed (possibly soon after, but probably decades later) by Singularia/Paperclipalypse:

https://dpiepgrass.medium.com/gpt5-wont-be-what-kills-us-all-57dde4c4e89d

In the meantime, I keep wondering how the disinformation and scamming potential of generative AI will play out in the absence of tools to raise the sanity waterline (https://forum.effectivealtruism.org/posts/fNKmP2bq7NuSLpCzD/let-s-make-the-truth-easier-to-find)... any thoughts?

I love your concrete forecasts on Scott Aaronson's five scenarios. I would give a much higher chance to Paperclipalypse and Singularia, but I can't say exactly why; the arguments are very theoretical and not based on recent developments.