This week I’m recommending readings on why AI researchers compare large language models to Lovecraftian horrors, the danger that AI could in practice amplify features of capitalism, and Biden’s new executive order on the development and use of AI.

The AI discourse over the last couple of weeks has focused on Netscape cofounder and venture capitalist Marc Andreessen’s accelerationist manifesto calling for technological development unfettered by any kind of regulation. The manifesto is part of the broader neoreactionary movement I discussed in my last post; Andreessen embeds a verbatim quotation from Italian futurist Filippo Marinetti, who was, not coincidentally, one of the authors of the so-called “Fascist Manifesto” associated with Mussolini. Andreessen writes that concern for the consequences of technological development is “the enemy” and specifically singles out concepts like “sustainability,” “trust and safety,” and “risk management” as being both anti-technology and anti-life. It’s worth reading the responses to Andreessen from people like Dave Karpf, Max Read, Elizabeth Spiers, and Matthew Yglesias, but suffice it to say that it’s pretty self-serving for a tech billionaire to argue that the rich should be able to build and sell whatever they want to. While I’m all for technological progress, letting them do so is clearly not in the interest of everyone else.

What I’m reading this week—this is another what-I’m-reading post—has only strengthened my conviction that we need to think clearly about the potential consequences of new, powerful technologies. What matters is not just whether we develop technology, but how we develop technology. It doesn’t take great insight to see that not every technology or application of technology is socially beneficial. Andreessen’s naive techno-optimism glosses over the real risks of new technologies like AI. The kind of society we live in—and the extent to which we collectively share the benefits of technological progress—depends on what we build and how we build it. We certainly shouldn’t leave decisions about technological development just to messianic venture capitalists; we have to make them collectively, as a society.

Kevin Roose, “Why an Octopus-like Creature Has Come to Symbolize the State of A.I.” (The New York Times)

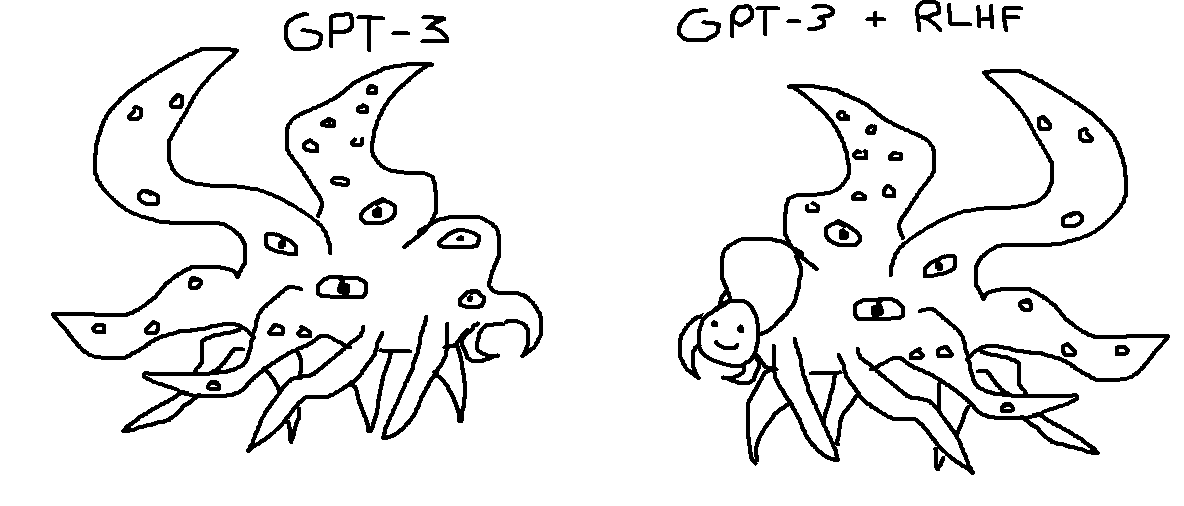

“In a nutshell, the joke was that in order to prevent A.I. language models from behaving in scary and dangerous ways, A.I. companies have had to train them to act polite and harmless. One popular way to do this is called “reinforcement learning from human feedback,” or R.L.H.F., a process that involves asking humans to score chatbot responses and feeding those scores back into the A.I. model. Most A.I. researchers agree that models trained using R.L.H.F. are better behaved than models without it. But some argue that fine-tuning a language model this way doesn’t actually make the underlying model less weird and inscrutable. In their view, it’s just a flimsy, friendly mask that obscures the mysterious beast underneath.”

When large language models behave in unexpected or unintended ways (as when Bing’s chatbot told Kevin Roose that it was in love with him, or when it threatened to blackmail Seth Lazar), AI researchers sometimes say they have “glimpsed the shoggoth.” The term comes from H.P. Lovecraft, who in the novella “At the Mountains of Madness” describes a race of beings called “shoggoths” as “the utter, objective embodiment of the fantastic novelist’s ‘thing that should not be’” and “shapeless congeries of protoplasmic bubbles, faintly self-luminous, and with myriads of temporary eyes forming and unforming as pustules of greenish light.”1 The truth is that we don’t understand the models we’re creating (or, rather, the intelligences we’re summoning) even to the limited extent we understand human minds. AI researchers are working to improve the “interpretability” of AI systems, but when researchers compare large language models to cosmic horrors, they’re saying they really don’t understand what they’re creating. Right now, beneath their presentable veneer, these models are inhuman, incomprehensible, and monstrous.

Ted Chiang, “Will A.I. Become the New McKinsey?” (The New Yorker)

“The point of the Midas parable is that greed will destroy you, and that the pursuit of wealth will cost you everything that is truly important. If your reading of the parable is that, when you are granted a wish by the gods, you should phrase your wish very, very carefully, then you have missed the point.”

Someone has probably already recommended this essay to you. I’m recommending it too because if you haven’t read it already, you should. Chiang, who is known for his wonderful science fiction, is less concerned that AI will fail to do what we ask it to than that we’re asking it to do the wrong thing. In particular, he says the main thing we seem to want AI to do (because it’s the main thing capitalist societies want to do) is to cut costs by replacing human workers with automated processes. This is, as he says, “exactly the type of problem that management wants solved.” It is what businesses hire consulting firms like McKinsey to do. AI is, in other words, likely to be deployed to benefit capital at the expense of labor. This doesn’t have to be the way things go. We could in theory all benefit from a more efficient, more productive economy. AI could be used both to improve the quality of our lives and to extend them. But at the moment there are virtually no serious plans to ensure that happens. The last thirty years don’t provide much reason to think that the benefits of improved technology will necessarily be shared equally. Management will certainly be in no hurry to return a share of their profits to those displaced by automation. Some argue that capitalism is itself a kind of shoggoth in the sense that it allocates resources according to its own sometimes incomprehensible logic. “The doomsday scenario,” Chiang writes, “is not a manufacturing A.I. transforming the entire planet into paper clips, as one famous thought experiment has imagined. It’s A.I.-supercharged corporations destroying the environment and the working class in their pursuit of shareholder value. Capitalism is the machine that will do whatever it takes to prevent us from turning it off, and the most successful weapon in its arsenal has been its campaign to prevent us from considering any alternatives.”

Timothy B. Lee, “Joe Biden’s Ambitious Plan to Regulate AI, Explained” (Understanding AI)

Going forward, anyone building a model significantly more powerful than GPT-4 will be required to conduct red-team safety tests and report the results of those tests to the federal government. American cloud providers will also be required to monitor their foreign customers and report to the US government if they appear to be training large models. Models that analyze biological sequence data—which could be used in bioterrorism—get extra scrutiny. The order starts developing a regulatory framework for better oversight of biology labs that could be used to synthesize dangerous pathogens designed with future AI systems.

President Biden signed an executive order on AI at the end of October that he described as “the most significant action any government, anywhere in the world, has ever taken on AI safety, security, and trust.” As Timothy B. Lee says, that may be true. It doesn’t look like the current Congress is capable of passing any serious AI legislation, so Biden used emergency powers under the Defense Production Act and the International Emergency Economic Powers Act to regulate the development and use of AI. You don’t have to believe AI is likely to destroy humanity to recognize that AI technology is developing quickly and in ways that we might not like. Although Biden’s rules don’t have the force of actual law, they’re specific and meaningful, rather than vague and aspirational. They include safety reporting requirements for developers of powerful models (as measured by the number of mathematical operations required to train the models) and “know your customer” rules for domestic server hosts. While it is reasonable to worry that regulation could slow the development of potentially beneficial technologies (and these rules will inevitably be flawed in some ways), this executive order seems like a worthwhile first attempt to bring order to the Wild West of AI development.

I recently signed an open letter calling for an international AI safety treaty. I think the catastrophic risk from AI is somewhat overblown, but I also think international action is required to solve the collective action problem posed by the fact that what’s in the interest of individual states or AI developers is not always in the interest of humanity as a whole. I don’t think a treaty like the one the letter calls for would be a panacea, but given the enormous stakes we should try to address the real risks of AI in any way we can.

If you enjoyed this post, please support my work by sharing it with just one other person! Thank you to everyone who has already bought a paid subscription to Telling the Future. I apologize for the unexpected hiatus over the last few weeks. I had a spate of medical appointments that took more time than I expected—I’m fine, just making sure I stay that way—but I should have new forecasts on the important things happening in the world for you soon.

1. H.P. Lovecraft, “At the Mountains of Madness” (1936).

History has shown us that with every leap in automation, fears of unemployment surge, yet time and again, these fears have been unfounded as new job categories emerge. Current laws already address criminal misuse of tools, AI included. Overregulating AI could unnecessarily hinder innovation. Let's focus on adaptive enforcement of existing laws and remain optimistic about the potential for AI to generate new economic opportunities.

I didn't really understand the issues behind AI, and hadn't been following its nefarious implications carefully. This was really helpful!

And unsettling.